Neutron L2 Gateway + HP 5930 Switch OVSDB Integration for VXLAN Bridging and Routing

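Neutron L2 Gateway is a new OpenStack project that works as a Neutron service plugin. It currently supports only one use case: bridging a Neutron VXLAN tenant network to a physical VLAN network through a physical switch that has OVSDB hardware VTEP enabled.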

This blog walks through all the steps needed to configure OpenStack and the physical switch to get Neutron L2-GW working.

An HP 5930 switch is used in this integration.

Red Hat OpenStack Platform 7 (Kilo) is used as the OpenStack environment.

The L2 population mechanism driver must be enabled for L2-GW to work.
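Before going further, you can run a quick sanity check with the Python 3 sketch below. It reads the two settings usually involved in enabling L2 population with the OVS agent: `mechanism_drivers` in the `[ml2]` section of the ML2 config, and `l2_population` in the `[agent]` section of the OVS agent config. The helper name `l2pop_enabled` is hypothetical. The file paths in the usage note are assumptions based on a typical Kilo layout, so adjust them to your deployment.

```python
import configparser


def l2pop_enabled(ml2_text, agent_text):
    """Return True if both the ML2 driver list and the OVS agent enable l2population.

    ml2_text:   contents of the ML2 plugin config (needs an [ml2] section)
    agent_text: contents of the OVS agent config (needs an [agent] section)
    """
    # interpolation=None so values containing '%' are not interpreted.
    ml2 = configparser.ConfigParser(interpolation=None)
    ml2.read_string(ml2_text)
    drivers = [
        d.strip()
        for d in ml2.get("ml2", "mechanism_drivers", fallback="").split(",")
        if d.strip()
    ]

    agent = configparser.ConfigParser(interpolation=None)
    agent.read_string(agent_text)
    # A missing section or option falls back to False.
    agent_l2pop = agent.getboolean("agent", "l2_population", fallback=False)

    return "l2population" in drivers and agent_l2pop
```

Example usage, assuming the usual paths: `l2pop_enabled(open("/etc/neutron/plugins/ml2/ml2_conf.ini").read(), open("/etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini").read())`.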

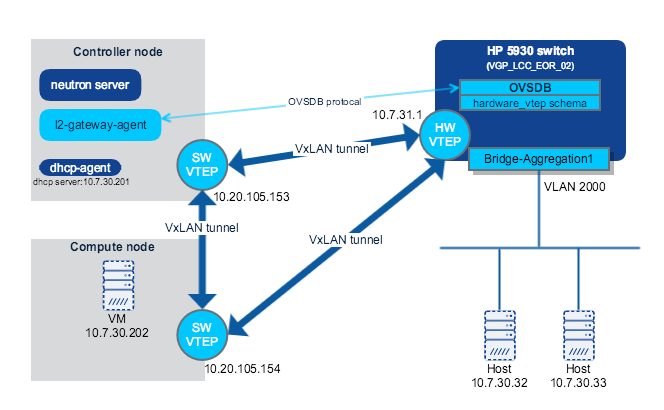

这是网络图:

HP 5930 switch configuration

First, let's configure the HP 5930 switch.

Enter system view:

<VGP_LCC_EOR_02>system-view

[VGP_LCC_EOR_02]

Enable L2VPN:

l2vpn enable

Configure a passive TCP connection for OVSDB. Here we use port 6632:

ovsdb server ptcp port 6632

Enable the OVSDB server:

ovsdb server enable

Enable the VTEP process:

vtep enable

Configure 10.7.31.1 as the VTEP source IP:

tunnel global source-address 10.7.31.1

Configure the VTEP access port. In our example we use Bridge-Aggregation1:

interface Bridge-Aggregation1

vtep access port

The HP switch configuration is now complete. To see what the OVSDB looks like, dump it by running ovsdb-client from any Linux machine:

[stack@under ~(stackrc)]$ ovsdb-client dump --pretty tcp:10.7.31.1:6632

Arp_Sources_Local table

_uuid locator src_mac

----- ------- -------

Arp_Sources_Remote table

_uuid locator src_mac

----- ------- -------

Global table

_uuid managers switches

------------------------------------ -------- --------------------------------------

58708319-c85e-44a5-b221-4c2d3cde6004 [] [8f3d25db-f219-443e-9771-1d8e5219b5d9]

Logical_Binding_Stats table

_uuid bytes_from_local bytes_to_local packets_from_local packets_to_local

----- ---------------- -------------- ------------------ ----------------

Logical_Router table

_uuid description name static_routes switch_binding

----- ----------- ---- ------------- --------------

Logical_Switch table

_uuid description name tunnel_key

----- ----------- ---- ----------

Manager table

_uuid inactivity_probe is_connected max_backoff other_config status target

----- ---------------- ------------ ----------- ------------ ------ ------

Mcast_Macs_Local table

MAC _uuid ipaddr locator_set logical_switch

--- ----- ------ ----------- --------------

Mcast_Macs_Remote table

MAC _uuid ipaddr locator_set logical_switch

--- ----- ------ ----------- --------------

Physical_Locator table

_uuid dst_ip encapsulation_type

----- ------ ------------------

Physical_Locator_Set table

_uuid locators

----- --------

Physical_Port table

_uuid description name port_fault_status vlan_bindings vlan_stats

------------------------------------ ----------- --------------------- ----------------- ------------- ----------

28d3da91-7666-492e-9c20-fc960249a7b6 "" "Bridge-Aggregation1" [UP] {} {}

Physical_Switch table

_uuid description management_ips name ports switch_fault_status tunnel_ips tunnels

------------------------------------ ----------- -------------- ---------------- -------------------------------------- ------------------- ------------- -------

8f3d25db-f219-443e-9771-1d8e5219b5d9 "" [] "VGP_LCC_EOR_02" [28d3da91-7666-492e-9c20-fc960249a7b6] [] ["10.7.31.1"] []

Tunnel table

_uuid bfd_config_local bfd_config_remote bfd_params bfd_status local remote

----- ---------------- ----------------- ---------- ---------- ----- ------

Ucast_Macs_Local table

MAC _uuid ipaddr locator logical_switch

--- ----- ------ ------- --------------

Ucast_Macs_Remote table

MAC _uuid ipaddr locator logical_switch

--- ----- ------ ------- --------------

We can see this switch in the Physical_Switch table and its port in the Physical_Port table.
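ovsdb-client talks to the switch using the OVSDB JSON-RPC protocol from RFC 7047. If you want to query individual tables from a script, the Python 3 sketch below sends one `transact` request containing a `select` operation against the `hardware_vtep` database. That is the database name defined by the VTEP schema. The helpers `build_select` and `query` are hypothetical illustrations, not part of ovsdb-client or networking-l2gw. The sketch also does not answer the server's `echo` keepalives, so it is only suitable for short one-off queries.

```python
import json
import socket

# Database name defined by the OVSDB hardware VTEP schema.
VTEP_DB = "hardware_vtep"


def build_select(table, columns=None, request_id=0):
    """Build an RFC 7047 'transact' request selecting every row of `table`."""
    op = {"op": "select", "table": table, "where": []}  # empty where = all rows
    if columns:
        op["columns"] = list(columns)
    return {"method": "transact", "params": [VTEP_DB, op], "id": request_id}


def query(host, port, request, timeout=5.0):
    """Send one JSON-RPC request and return the reply whose id matches it."""
    decoder = json.JSONDecoder()
    buf = b""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(json.dumps(request).encode("utf-8"))
        while True:
            chunk = sock.recv(65536)
            if not chunk:
                raise ConnectionError("connection closed before a full reply arrived")
            buf += chunk
            try:
                text = buf.decode("utf-8").lstrip()
            except UnicodeDecodeError:
                continue  # a multi-byte character was split across reads
            while text:
                try:
                    msg, end = decoder.raw_decode(text)
                except json.JSONDecodeError:
                    break  # current message is incomplete; read more
                if msg.get("id") == request["id"]:
                    return msg
                # Skip unrelated messages, e.g. an "echo" keepalive request.
                text = text[end:].lstrip()
            buf = text.encode("utf-8")
```

Against the switch used here, `query("10.7.31.1", 6632, build_select("Physical_Port", ["name", "vlan_bindings"]))["result"][0]["rows"]` should list the same ports as the dump. A `select` result carries its rows under the `rows` key.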

L2-GW installation and integration with Neutron

On the controller node, get the latest networking-l2gw code from the stable/kilo branch:

git clone -b stable/kilo https://github.com/openstack/networking-l2gw.git

Install it with pip:

pip install ~/networking-l2gw/

Add the L2-GW service plugin to neutron-server:

[root@overcloud-controller-0 ~]# grep ^service_pl /etc/neutron/neutron.conf

service_plugins =router,networking_l2gw.services.l2gateway.plugin.L2GatewayPlugin

Copy the L2-GW sample configuration files to /etc/neutron/:

[root@overcloud-controller-0 ~]# cp /usr/etc/neutron/l2g* /etc/neutron/

Add the HP 5930 OVSDB connection to l2gateway_agent.ini. Here ovsdb1 is a user-defined identifier for the physical switch:

[root@overcloud-controller-0 ~]# grep ^ovsdb /etc/neutron/l2gateway_agent.ini

ovsdb_hosts = 'ovsdb1:10.7.31.1:6632'
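The ovsdb_hosts value packs three fields into one string: an identifier, an IP address and a port. This identifier is the one that later shows up as ovsdb_identifier in the Neutron database. The hypothetical Python helper below splits the value into its parts, which can be handy for checking the setting before starting the agent. It assumes that several switches are given as a comma-separated list, and that addresses are IPv4 (an IPv6 address would break the simple split on ":"). It is not networking-l2gw's own parser.

```python
def parse_ovsdb_hosts(value):
    """Split an ovsdb_hosts string into (identifier, ip, port) tuples.

    Assumes the form 'name:ipv4:port[,name:ipv4:port...]', optionally quoted.
    """
    entries = []
    for item in value.strip().strip("'\"").split(","):
        item = item.strip()
        if not item:
            continue
        parts = item.split(":")
        if len(parts) != 3:
            raise ValueError("expected identifier:ip:port, got %r" % item)
        identifier, ip, port = parts
        entries.append((identifier, ip, int(port)))
    return entries
```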

Create a neutron-l2gateway-agent.service systemd unit file so that we can control the service with systemctl:

[root@overcloud-controller-0 system]# cat /usr/lib/systemd/system/neutron-l2gateway-agent.service

[Unit]

Description=OpenStack Neutron L2 Gateway Agent

After=syslog.target network.target

[Service]

Type=simple

User=neutron

ExecStart=/usr/bin/neutron-l2gateway-agent --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/l2gateway_agent.ini

PrivateTmp=false

KillMode=process

[Install]

WantedBy=multi-user.target

Load the unit file we created:

[root@overcloud-controller-0]# systemctl daemon-reload

Stop the Neutron server:

pcs resource disable neutron-server-clone

Upgrade the Neutron database schema:

neutron-l2gw-db-manage --config-file /etc/neutron/neutron.conf upgrade head

Start the Neutron server again:

pcs resource enable neutron-server-clone

Start the neutron-l2gateway-agent service:

systemctl start neutron-l2gateway-agent.service

Now that Neutron L2-GW is enabled, the L2 Gateway agent should appear in the output of neutron agent-list:

[root@overcloud-controller-0 ~]# neutron agent-list

+--------------------------------------+--------------------+------------------------------------+-------+----------------+---------------------------+

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+------------------------------------+-------+----------------+---------------------------+

| 097320d1-9e7c-468a-bcee-948c560b65c9 | Metadata agent | overcloud-controller-0.localdomain | :-) | True | neutron-metadata-agent |

| 0ef52d27-9b91-40d4-b9dd-d24ff4f86ef7 | Open vSwitch agent | overcloud-controller-0.localdomain | :-) | True | neutron-openvswitch-agent |

| 304a3e9b-3a6f-4ae6-b2af-02891fa63d0f | L2 Gateway agent | overcloud-controller-0.localdomain | :-) | True | neutron-l2gateway-agent |

| 4f81e156-e679-4d76-a816-9b976cf9d62f | Open vSwitch agent | overcloud-compute-0.localdomain | :-) | True | neutron-openvswitch-agent |

| 71f7e395-045f-47a2-9575-9976604eac7c | L3 agent | overcloud-controller-0.localdomain | :-) | True | neutron-l3-agent |

| 81f254a4-dbf2-48e2-afb1-9456201c2631 | DHCP agent | overcloud-controller-0.localdomain | :-) | True | neutron-dhcp-agent |

+--------------------------------------+--------------------+------------------------------------+-------+----------------+---------------------------+
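If you want to script this check, the Python 3 sketch below parses the ASCII table printed by neutron agent-list. It reports whether an "L2 Gateway agent" row shows ":-)" in the alive column. The function names are hypothetical. The sketch relies only on the table layout shown above, not on any neutronclient API.

```python
def parse_agent_table(text):
    """Turn the ASCII table from `neutron agent-list` into a list of dicts."""
    rows = []
    header = None
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip +----+ borders and blank lines
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if header is None:
            header = cells  # first '|' line holds the column names
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def l2gw_agent_alive(text):
    """True if at least one L2 Gateway agent is reported alive (':-)')."""
    return any(
        row.get("agent_type") == "L2 Gateway agent" and row.get("alive") == ":-)"
        for row in parse_agent_table(text)
    )
```

To run it against a live deployment, pass in the captured output, for example `subprocess.run(["neutron", "agent-list"], capture_output=True, text=True).stdout`.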

We can also see that the physical switch is registered in the Neutron database:

MariaDB [ovs_neutron]> select * from physical_switches;

+--------------------------------------+----------------+-----------+------------------+---------------------+

| uuid | name | tunnel_ip | ovsdb_identifier | switch_fault_status |

+--------------------------------------+----------------+-----------+------------------+---------------------+

| 8f3d25db-f219-443e-9771-1d8e5219b5d9 | VGP_LCC_EOR_02 | 10.7.31.1 | ovsdb1 | NULL |

+--------------------------------------+----------------+-----------+------------------+---------------------+

L2-GW usage

Create a Neutron network (VXLAN overlay):

[root@overcloud-controller-0 ~(admin)]# neutron net-create test-l2gw

Created a new network:

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | True |

| id | 49485e07-77db-4d43-8b20-068597f744a5 |

| mtu | 0 |

| name | test-l2gw |

| provider:network_type | vxlan |

| provider:physical_network | |

| provider:segmentation_id | 100 |

| router:external | False |

| shared | False |

| status | ACTIVE |

| subnets | |

| tenant_id | 7128bb366ffd47ffb445965daab45751 |

+---------------------------+--------------------------------------+

We can see that Neutron allocated VNI ID 100 to this tenant network.

Let's create a subnet for it:

neutron subnet-create --allocation-pool start=10.7.30.201,end=10.7.30.210 test-l2gw 10.7.30.0/24
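Because this subnet is about to be bridged onto a physical VLAN, the Neutron allocation pool must not overlap with addresses already used on the physical side. The Python 3 sketch below checks this with the standard ipaddress module. It uses the pool from the command above and the physical addresses that appear later in this post: the hosts 10.7.30.32 and 10.7.30.33 and the switch gateway 10.7.30.1. The names are illustrative. DEFAULT_GATEWAY reflects Neutron's default of using the first host address when no gateway is given, as in the command above.

```python
import ipaddress

SUBNET = ipaddress.ip_network("10.7.30.0/24")
POOL_START = ipaddress.ip_address("10.7.30.201")
POOL_END = ipaddress.ip_address("10.7.30.210")

# Neutron picks the first host address as gateway when --gateway is omitted.
DEFAULT_GATEWAY = next(SUBNET.hosts())

# Addresses already in use on the physical VLAN side (taken from this post).
PHYSICAL_ADDRESSES = ["10.7.30.1", "10.7.30.32", "10.7.30.33"]


def in_pool(ip):
    """True if `ip` falls inside the Neutron allocation pool."""
    return POOL_START <= ipaddress.ip_address(ip) <= POOL_END


def pool_size():
    """Number of addresses Neutron can hand out from the pool."""
    return int(POOL_END) - int(POOL_START) + 1


# Any address listed here would be handed out twice on the bridged segment.
conflicts = [ip for ip in PHYSICAL_ADDRESSES if in_pool(ip)]
```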

Launch a VM in this network:

nova boot --image cirros --flavor m1.tiny --nic net-id=49485e07-77db-4d43-8b20-068597f744a5 test-vm

Check the VM launch status and the allocated IP address:

[root@overcloud-controller-0 ~(admin)]# nova list

+--------------------------------------+---------+--------+------------+-------------+-----------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+---------+--------+------------+-------------+-----------------------+

| 15e9aefb-cddd-4be6-a74e-a3098e17f26c | test-vm | ACTIVE | - | Running | test-l2gw=10.7.30.202 |

+--------------------------------------+---------+--------+------------+-------------+-----------------------+

We can see the VM is running with IP 10.7.30.202.

We can check the VXLAN tunnels created on the controller and compute nodes:

[root@overcloud-controller-0 ~(admin)]# ovs-vsctl show | grep vxlan -A1

Port "vxlan-0a14699a"

Interface "vxlan-0a14699a"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="10.20.105.153", out_key=flow, remote_ip="10.20.105.154"}

[root@overcloud-compute-0 ~]# ovs-vsctl show | grep vxlan -A1

Port "vxlan-0a146999"

Interface "vxlan-0a146999"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="10.20.105.154", out_key=flow, remote_ip="10.20.105.153"}

At this point, the controller and the compute node each have only one tunnel, to each other. This tunnel carries the traffic between the VM and the DHCP server.
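The tunnel port names in this output encode the remote VTEP address in hex. For example, vxlan-0a14699a has remote_ip 10.20.105.154, and 0x0a, 0x14, 0x69, 0x9a are 10, 20, 105, 154. The Python 3 sketch below converts in both directions, assuming the "<type>-<8 hex digits of the IPv4 address>" convention that the names above follow. The helper names are hypothetical.

```python
import ipaddress


def tunnel_port_name(remote_ip, tunnel_type="vxlan"):
    """Build a port name like 'vxlan-0a14699a' from a remote IPv4 address."""
    return "%s-%08x" % (tunnel_type, int(ipaddress.IPv4Address(remote_ip)))


def remote_ip_from_port(name):
    """Recover the remote IPv4 address from a port name like 'vxlan-0a14699a'."""
    _, hex_ip = name.rsplit("-", 1)
    return str(ipaddress.IPv4Address(int(hex_ip, 16)))
```

This makes it quick to tell which node or switch a tunnel port points at when reading `ovs-vsctl show` output.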

Now let's create an L2 gateway using the neutron-l2gw client:

neutron-l2gw l2-gateway-create --device name="VGP_LCC_EOR_02",interface_names="Bridge-Aggregation1" gw1

Then create an l2-gateway-connection that bridges the tenant network test-l2gw to VLAN 2000 through gw1:

neutron-l2gw l2-gateway-connection-create --default-segmentation-id 2000 gw1 test-l2gw

Now we can try to ping hosts in VLAN 2000 from the controller's DHCP namespace and from the VM:

[root@overcloud-controller-0 ~(admin)]# ip netns exec qdhcp-49485e07-77db-4d43-8b20-068597f744a5 ping 10.7.30.32

PING 10.7.30.32 (10.7.30.32) 56(84) bytes of data.

64 bytes from 10.7.30.32: icmp_seq=61 ttl=64 time=0.493 ms

64 bytes from 10.7.30.32: icmp_seq=62 ttl=64 time=0.400 ms

64 bytes from 10.7.30.32: icmp_seq=63 ttl=64 time=0.443 ms

...

cirros$ ping 10.7.30.33

PING 10.7.30.33 (10.7.30.33): 56 data bytes

64 bytes from 10.7.30.33: seq=0 ttl=64 time=2.007 ms

64 bytes from 10.7.30.33: seq=1 ttl=64 time=1.034 ms

...

cirros$ ping 10.7.30.32

PING 10.7.30.32 (10.7.30.32): 56 data bytes

64 bytes from 10.7.30.32: seq=0 ttl=64 time=1.824 ms

64 bytes from 10.7.30.32: seq=1 ttl=64 time=1.007 ms

...

Pings work!!!

Let's check what makes this work under the hood.

Checking the VXLAN tunnels on the controller and compute nodes again, we now also see tunnels between each node and the HP 5930 switch:

[root@overcloud-controller-0 ~(admin)]# ovs-vsctl show | grep vxlan -A1

Port "vxlan-0a14699a"

Interface "vxlan-0a14699a"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="10.20.105.153", out_key=flow, remote_ip="10.20.105.154"}

Port "vxlan-0a071f01"

Interface "vxlan-0a071f01"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="10.20.105.153", out_key=flow, remote_ip="10.7.31.1"}

[root@overcloud-compute-0 ~]# ovs-vsctl show | grep vxlan -A1

Port "vxlan-0a146999"

Interface "vxlan-0a146999"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="10.20.105.154", out_key=flow, remote_ip="10.20.105.153"}

Port "vxlan-0a0713f4"

Interface "vxlan-0a0713f4"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="10.20.105.154", out_key=flow, remote_ip="10.7.31.1"}

Dump the OVSDB of the physical switch:

[stack@under ~(stackrc)]$ ovsdb-client dump --pretty tcp:10.7.31.1:6632

Arp_Sources_Local table

_uuid locator src_mac

----- ------- -------

Arp_Sources_Remote table

_uuid locator src_mac

----- ------- -------

Global table

_uuid managers switches

------------------------------------ -------- --------------------------------------

58708319-c85e-44a5-b221-4c2d3cde6004 [] [8f3d25db-f219-443e-9771-1d8e5219b5d9]

Logical_Binding_Stats table

_uuid bytes_from_local bytes_to_local packets_from_local packets_to_local

----- ---------------- -------------- ------------------ ----------------

Logical_Router table

_uuid description name static_routes switch_binding

----- ----------- ---- ------------- --------------

Logical_Switch table

_uuid description name tunnel_key

------------------------------------ ----------- -------------------------------------- ----------

c08ad894-886f-452a-a528-216f36d48958 "test-l2gw" "49485e07-77db-4d43-8b20-068597f744a5" 100

Manager table

_uuid inactivity_probe is_connected max_backoff other_config status target

----- ---------------- ------------ ----------- ------------ ------ ------

Mcast_Macs_Local table

MAC _uuid ipaddr locator_set logical_switch

----------- ------------------------------------ ------ ------------------------------------ ------------------------------------

unknown-dst aeaab3ec-a0ae-4937-8cda-14ca48039be8 "" e542b5fe-70c4-4872-a741-f334d1bf7021 c08ad894-886f-452a-a528-216f36d48958

Mcast_Macs_Remote table

MAC _uuid ipaddr locator_set logical_switch

--- ----- ------ ----------- --------------

Physical_Locator table

_uuid dst_ip encapsulation_type

------------------------------------ --------------- ------------------

de8e7621-023a-4dfe-bc79-1bbd97962c71 "10.20.105.153" "vxlan_over_ipv4"

6047ff6f-7662-44d0-8e49-b1c6afb51e68 "10.20.105.154" "vxlan_over_ipv4"

7e83e09d-2ee3-4c8d-8784-efae6b780c15 "10.7.31.1" "vxlan_over_ipv4"

Physical_Locator_Set table

_uuid locators

------------------------------------ --------------------------------------

e542b5fe-70c4-4872-a741-f334d1bf7021 [7e83e09d-2ee3-4c8d-8784-efae6b780c15]

Physical_Port table

_uuid description name port_fault_status vlan_bindings vlan_stats

------------------------------------ ----------- --------------------- ----------------- ------------------------------------------- ----------

28d3da91-7666-492e-9c20-fc960249a7b6 "" "Bridge-Aggregation1" [UP] {2000=c08ad894-886f-452a-a528-216f36d48958} {}

Physical_Switch table

_uuid description management_ips name ports switch_fault_status tunnel_ips tunnels

------------------------------------ ----------- -------------- ---------------- ---------------------------------------------------------------------------- ------------------- ------------- ----------------------------------------------------------------------------

8f3d25db-f219-443e-9771-1d8e5219b5d9 "" [] "VGP_LCC_EOR_02" [28d3da91-7666-492e-9c20-fc960249a7b6] [] ["10.7.31.1"] [340ae640-ad8c-4dc7-9c61-1f84c9c462aa, 68629911-a3d0-4793-8a06-583b544dd827]

Tunnel table

_uuid bfd_config_local bfd_config_remote bfd_params bfd_status local remote

------------------------------------ -------------------------------------------------------------- ------------------------------------------------------- --------------------------------------------------------------------------------------------------------------------- ------------------------------------------------------------------------------------ ------------------------------------ ------------------------------------

68629911-a3d0-4793-8a06-583b544dd827 {bfd_dst_ip="10.7.31.1", bfd_dst_mac="188:234:250:108:94:141"} {bfd_dst_ip="10.20.105.153", bfd_dst_mac="0:0:0:0:0:0"} {check_tnl_key="0", cpath_down="0", decay_min_rx="100", enable="1", forwarding_if_rx="1", min_rx="100", min_tx="100"} {diagnostic="", forwarding="0", remote_diagnostic="", remote_state=down, state=down} 7e83e09d-2ee3-4c8d-8784-efae6b780c15 de8e7621-023a-4dfe-bc79-1bbd97962c71

340ae640-ad8c-4dc7-9c61-1f84c9c462aa {bfd_dst_ip="10.7.31.1", bfd_dst_mac="188:234:250:108:94:141"} {bfd_dst_ip="10.20.105.154", bfd_dst_mac="0:0:0:0:0:0"} {check_tnl_key="0", cpath_down="0", decay_min_rx="100", enable="1", forwarding_if_rx="1", min_rx="100", min_tx="100"} {diagnostic="", forwarding="0", remote_diagnostic="", remote_state=down, state=down} 7e83e09d-2ee3-4c8d-8784-efae6b780c15 6047ff6f-7662-44d0-8e49-b1c6afb51e68

Ucast_Macs_Local table

MAC _uuid ipaddr locator logical_switch

------------------- ------------------------------------ ------ ------------------------------------ ------------------------------------

"2c:60:0c:c0:97:c9" 1b74b46b-5afb-41ad-ab84-faf1331d0c72 "" 7e83e09d-2ee3-4c8d-8784-efae6b780c15 c08ad894-886f-452a-a528-216f36d48958

"2c:60:0c:c0:a6:c1" 13996ca9-3de0-4bea-b815-036a07aa54d4 "" 7e83e09d-2ee3-4c8d-8784-efae6b780c15 c08ad894-886f-452a-a528-216f36d48958

"2c:60:0c:c0:a9:3b" 9d1c3c28-8ced-4e74-a1a9-bb3a27a41267 "" 7e83e09d-2ee3-4c8d-8784-efae6b780c15 c08ad894-886f-452a-a528-216f36d48958

Ucast_Macs_Remote table

MAC _uuid ipaddr locator logical_switch

------------------- ------------------------------------ ------------- ------------------------------------ ------------------------------------

"fa:16:3e:96:3b:ce" 18dd6787-d5dc-42dc-97eb-285faf5b23ff "10.7.30.202" 6047ff6f-7662-44d0-8e49-b1c6afb51e68 c08ad894-886f-452a-a528-216f36d48958

"fa:16:3e:d1:44:85" 109c752e-1b97-4169-b939-6ada0a8ec703 "10.7.30.201" de8e7621-023a-4dfe-bc79-1bbd97962c71 c08ad894-886f-452a-a528-216f36d48958

From the Tunnel table, we can see two VXLAN tunnels created between the physical switch and the controller and compute nodes.

From the Logical_Switch table, we can see a logical switch created with VNI ID (tunnel_key) 100. The Physical_Port table then shows that VLAN 2000 is bound to that logical switch.

From the Ucast_Macs_Remote table, we can see the MACs of 10.7.30.201 and 10.7.30.202, which belong to the DHCP server and the VM respectively.
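These tables reference each other by UUID, so reading the dump means following those references by hand. The Python 3 sketch below makes that explicit. The dictionaries are transcribed from the dump above and include only the columns needed. The helper names remote_vtep_for_mac and vni_for_vlan are hypothetical.

```python
# Physical_Locator: _uuid -> dst_ip (all vxlan_over_ipv4 in this dump).
PHYSICAL_LOCATORS = {
    "de8e7621-023a-4dfe-bc79-1bbd97962c71": "10.20.105.153",  # controller
    "6047ff6f-7662-44d0-8e49-b1c6afb51e68": "10.20.105.154",  # compute
    "7e83e09d-2ee3-4c8d-8784-efae6b780c15": "10.7.31.1",      # HP 5930 itself
}

# Logical_Switch: _uuid -> (name, tunnel_key / VNI).
LOGICAL_SWITCHES = {
    "c08ad894-886f-452a-a528-216f36d48958": ("test-l2gw", 100),
}

# Physical_Port "Bridge-Aggregation1": vlan_bindings (VLAN -> logical switch).
VLAN_BINDINGS = {2000: "c08ad894-886f-452a-a528-216f36d48958"}

# Ucast_Macs_Remote: MAC -> (ipaddr, locator _uuid).
UCAST_MACS_REMOTE = {
    "fa:16:3e:96:3b:ce": ("10.7.30.202", "6047ff6f-7662-44d0-8e49-b1c6afb51e68"),
    "fa:16:3e:d1:44:85": ("10.7.30.201", "de8e7621-023a-4dfe-bc79-1bbd97962c71"),
}


def remote_vtep_for_mac(mac):
    """Return the VTEP IP the switch tunnels to when sending to `mac`."""
    _, locator = UCAST_MACS_REMOTE[mac]
    return PHYSICAL_LOCATORS[locator]


def vni_for_vlan(vlan):
    """Return the VNI that a VLAN on the access port is mapped to."""
    _, tunnel_key = LOGICAL_SWITCHES[VLAN_BINDINGS[vlan]]
    return tunnel_key
```

Following the references this way shows that frames for the VM (10.7.30.202) are tunneled to the compute node, and frames for the DHCP port (10.7.30.201) to the controller. Both are carried in VNI 100, which the switch maps to VLAN 2000.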

Check the tunnel interfaces from the HP 5930 console:

[VGP_LCC_EOR_02]dis interface Tunnel

Tunnel0

Current state: UP

Line protocol state: UP

Description: created by ovsdb

Bandwidth: 64 kbps

Maximum transmission unit: 1464

Internet protocol processing: Disabled

Last clearing of counters: Never

Tunnel source unknown, destination 10.20.105.153

Tunnel protocol/transport UDP_VXLAN/IP

Tunnel1

Current state: UP

Line protocol state: UP

Description: created by ovsdb

Bandwidth: 64 kbps

Maximum transmission unit: 1464

Internet protocol processing: Disabled

Last clearing of counters: Never

Tunnel source unknown, destination 10.20.105.154

Tunnel protocol/transport UDP_VXLAN/IP

Two tunnels are shown there as well.
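In the OVSDB Tunnel table, bfd_dst_mac values such as 188:234:250:108:94:141 look like they are printed as decimal octets rather than the usual hex notation. Assuming that is the case, the small Python 3 helper below converts them to conventional colon-separated hex, which makes them easier to compare with MAC addresses shown elsewhere. The helper name is hypothetical.

```python
def bfd_mac_to_hex(value):
    """Convert a decimal-octet MAC like '188:234:250:108:94:141' to hex form."""
    octets = [int(part) for part in value.split(":")]
    if len(octets) != 6 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError("not a 6-octet decimal MAC: %r" % value)
    return ":".join("%02x" % o for o in octets)
```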

VXLAN routing problem

On the HP 5930 switch, VLAN 2000 has an L3 IP address that acts as the gateway of this network:

[VGP_LCC_EOR_02]display current-configuration interface Vlan-interface 2000

interface Vlan-interface2000

ip address 10.7.30.1 255.255.255.0

After the l2-gateway-connection is created, this default gateway IP cannot be reached from the VM. This is due to a limitation of the Trident 2 chipset in the HP 5930 switch; for details, see this blog post: LAYER-3 SWITCHING OVER VXLAN REVISITED

Working around the VXLAN routing problem with a loopback cable on the switch

To work around the hardware limitation, we can add a loopback cable on the HP 5930 switch. Traffic is first bridged from VXLAN to VLAN, then passes through the loopback cable and reaches the L3 gateway as normal VLAN-routed traffic.

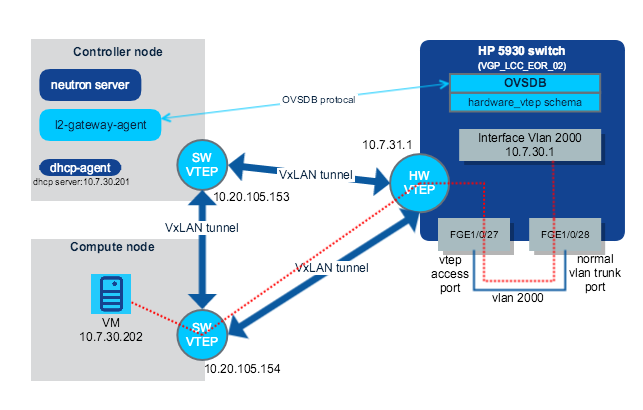

The diagram is:

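The loopback cable connects FGE1/0/27 and FGE1/0/28. Here we configure FGE1/0/27 as the VTEP access port and FGE1/0/28 as a normal VLAN trunk port. The red dashed line shows the packet path between the VM and the L3 GW.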

On the HP 5930 switch:

[VGP_LCC_EOR_02]dis current-configuration interface FortyGigE 1/0/27

#

interface FortyGigE1/0/27

port link-mode bridge

port link-type trunk

undo port trunk permit vlan 1

vtep access port

#

return

[VGP_LCC_EOR_02]dis current-configuration interface FortyGigE 1/0/28

#

interface FortyGigE1/0/28

port link-mode bridge

port link-type trunk

undo port trunk permit vlan 1

port trunk permit vlan 2000

#

return

We need to delete the previously created L2 gateway connection and L2 gateway:

neutron-l2gw l2-gateway-connection-delete 4fb1c9eb-de29-442c-a946-190f5f99cf17

neutron-l2gw l2-gateway-delete gw1

Re-create L2 gateway gw1 with interface FortyGigE1/0/27:

neutron-l2gw l2-gateway-create --device name="VGP_LCC_EOR_02",interface_names="FortyGigE1/0/27" gw1

Create an L2 gateway connection that bridges the tenant network test-l2gw to VLAN 2000 through gw1:

neutron-l2gw l2-gateway-connection-create --default-segmentation-id 2000 gw1 test-l2gw

Now try to ping the L3 GW 10.7.30.1 from the VM:

$ ping 10.7.30.1

PING 10.7.30.1 (10.7.30.1): 56 data bytes

64 bytes from 10.7.30.1: seq=1 ttl=64 time=1.463 ms

64 bytes from 10.7.30.1: seq=2 ttl=64 time=2.606 ms

64 bytes from 10.7.30.1: seq=3 ttl=64 time=1.036 ms

...

It works now!!!
